In 2025, artificial intelligence is actively reshaping offensive security across Web3. Attackers are using generative models and automation to scale phishing, manipulate context, and probe smart contracts. As protocols increasingly integrate autonomous agents, AI becomes both a tool and a threat. This post outlines how attackers are applying these techniques, presents supporting data, and provides recommendations for security-conscious organizations.

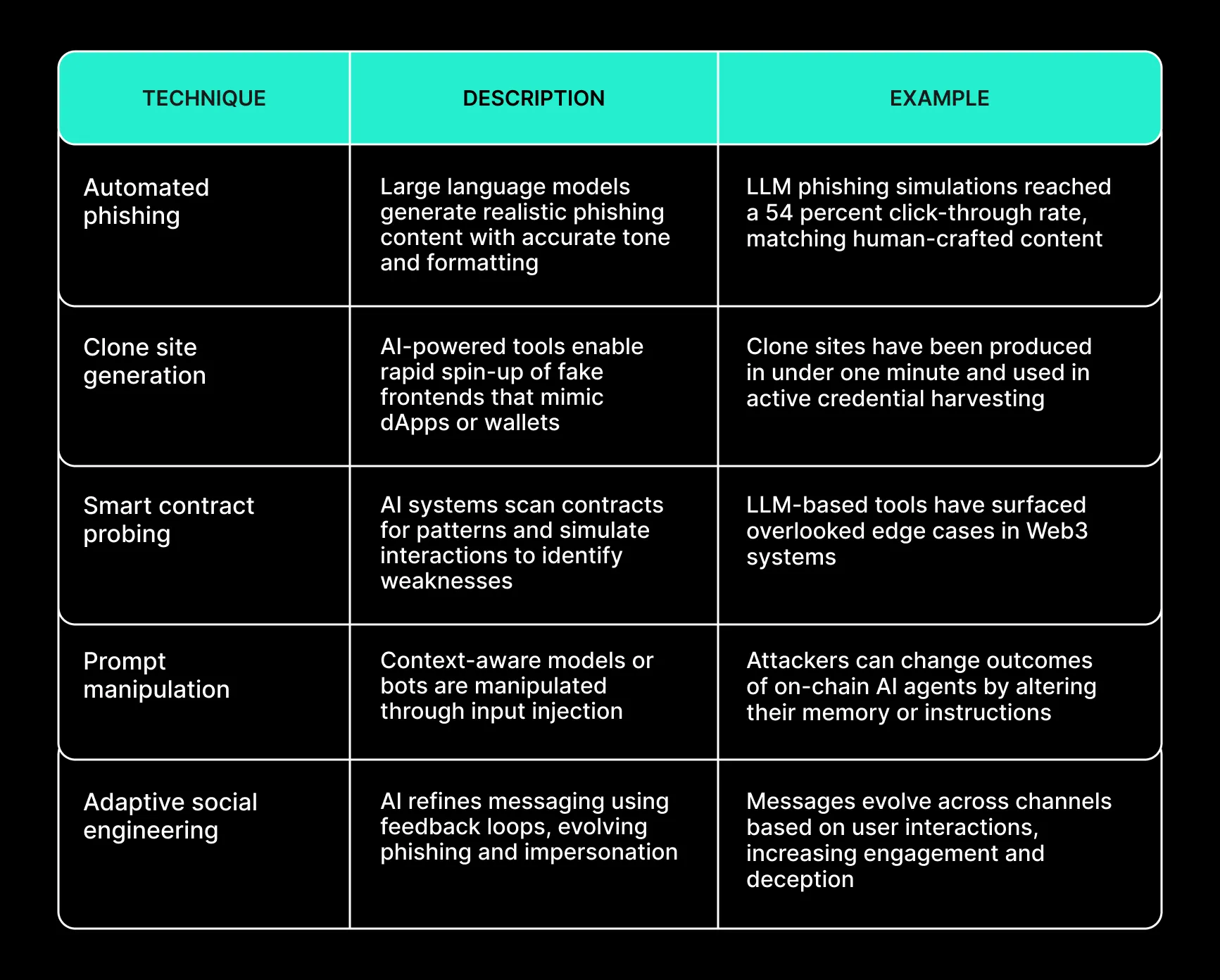

Common AI-Driven Attack Techniques

Industry Trends and Data Points

Web3 saw over $3.1 billion in losses from attacks in the first half of 2025. Phishing and social engineering accounted for more than $600 million. AI-driven threats have surged by over 1,000 percent year-over-year. Attackers now routinely deploy AI to bypass traditional spam filters, manipulate context, and clone entire sites in seconds. In autonomous systems, AI agents are already being exploited via context injection and logic redirection.

Researchers have documented that LLM-crafted phishing emails match human-written ones in effectiveness while scaling to thousands of targets. Attackers are integrating these capabilities into social engineering workflows, automating both target discovery and message personalization.

Unique Web3 Risk Factors

Several properties of Web3 environments amplify AI-driven attacks:

- Transactions are irreversible, compressing the time window for detection and response

- Users interact with smart contracts through interfaces that can be cloned or impersonated

- Many protocols expose composable components and cross-contract logic that automation can probe efficiently

- Autonomous agents and AI-driven bots are now active participants in financial and operational workflows, creating new attack surfaces

Unlike traditional software systems, Web3 generally lacks centralized kill switches, making proactive defense essential.
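Because a confirmed transaction cannot be rolled back, any check has to run before signing. The following is a minimal sketch of such a pre-signing gate, not a production policy engine. The `PendingTransfer` shape, the `pre_sign_decision` function, the allowlist of known addresses, and the 10,000 USD threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class PendingTransfer:
    """A transfer awaiting signature (hypothetical shape, for illustration)."""
    to_address: str
    value_usd: Decimal


def pre_sign_decision(tx, known_addresses, high_value_usd=Decimal("10000")):
    """Return "allow", "verify", or "block" before anything is signed.

    The threshold and the allowlist are assumptions; a real deployment
    would derive both from its own risk model.
    """
    recipient = tx.to_address.lower()
    known = {address.lower() for address in known_addresses}
    is_known = recipient in known
    is_high_value = tx.value_usd >= high_value_usd

    if not is_known and is_high_value:
        return "block"   # unknown recipient and large amount: stop outright
    if not is_known or is_high_value:
        return "verify"  # one risk signal: require out-of-band confirmation
    return "allow"       # known recipient, small amount
```

A wallet or agent runtime could call `pre_sign_decision` on every outgoing transfer and refuse to sign anything that does not return `"allow"` until a human confirms it through a separate channel.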

Recommendations for Defense

Security organizations should take the following steps to address AI-driven attack vectors:

- Implement anomaly detection systems using both rule-based and AI-based techniques

- Monitor domain registrations and front-end behavior to detect clones and phishing infrastructure

- Harden user flows by requiring out-of-band verification for high-value transactions

- Conduct adversarial testing against smart contracts and autonomous agents

- Regularly audit AI agents for prompt injection resilience and behavioral integrity

- Minimize permissions across all wallets, contracts, and systems

- Train internal teams and communities on evolving phishing formats and impersonation methods

- Develop incident response procedures that assume automated scale and speed
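As one illustration of the domain-monitoring recommendation above, the sketch below flags candidate domains that sit within a small edit distance of an official domain. It assumes a list of newly observed domains is already available from some feed. The `find_lookalikes` helper, the `max_distance` threshold, and the `example.org` placeholder are hypothetical, and edit distance is only one of several possible similarity signals.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance, computed with a two-row dynamic programme."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or match)
            ))
        previous = current
    return previous[-1]


def find_lookalikes(official: str, candidates, max_distance: int = 2):
    """Return (domain, distance) pairs that are close to, but not equal to,
    the official domain, nearest first."""
    official = official.lower()
    hits = []
    for domain in candidates:
        distance = edit_distance(official, domain.lower())
        if 0 < distance <= max_distance:
            hits.append((domain, distance))
    return sorted(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    observed = ["examp1e.org", "example.org", "unrelated.net"]
    for domain, distance in find_lookalikes("example.org", observed):
        print(f"possible clone: {domain} (distance {distance})")
```

This approach catches single-character swaps and insertions but not clones that add whole words or change the top-level domain, so it would complement rather than replace front-end behavior monitoring.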

Conclusion

AI has significantly lowered the cost and increased the effectiveness of attacks in Web3. The ability to automate reconnaissance, messaging, interaction, and exploitation introduces a strategic shift in how adversaries operate. Organizations must respond with equally adaptive defenses. This includes continuous monitoring, proactive threat modeling, and architecture design that accounts for both human and machine adversaries. The pace of development in generative models and autonomous systems will continue to increase. Security practices must evolve accordingly to reduce risk in an AI-native threat environment.

If your organization is navigating these challenges, Spearbit offers deep, tailored security expertise to help you stay ahead of emerging threats.

Connect with our team to learn how we support high-stakes systems with high-context reviews and resilient security architecture.