Key Takeaways

- Model-driven agents are already live in DeFi and making autonomous execution decisions, but they often lack runtime constraints, logging, or fallback mechanisms, exposing new, high-impact failure modes.

- Static audits and testing provide a strong foundation. Still, it's often just as important to observe how deployed agents behave in real-world conditions, where adversarial inputs, unstable data, and rare edge cases can reveal issues that remain hidden in controlled environments."

- Spearbit is building tools and processes to stress-test agents pre-deployment, validate identity and intent, and help teams understand how agent logic behaves under pressure, well before these issues arise in production.

Introduction

DeFi systems are now making decisions about how capital moves, allocating, rebalancing, and withdrawing it, without requiring direct user input. They interpret user commands, protocol state, and market data to generate transactions. Execution is no longer tied to a single user action but shaped by logic that reacts to changing inputs. This creates risks that static analysis often misses. Unchecked assumptions, weak boundaries, and unclear conditions can lead to unintended asset movement. Spearbit is tracking these risks in live systems. In this article, we’ll cover where these systems are active, how they can fail, and what controls are being developed in response.

AI Agents in Production

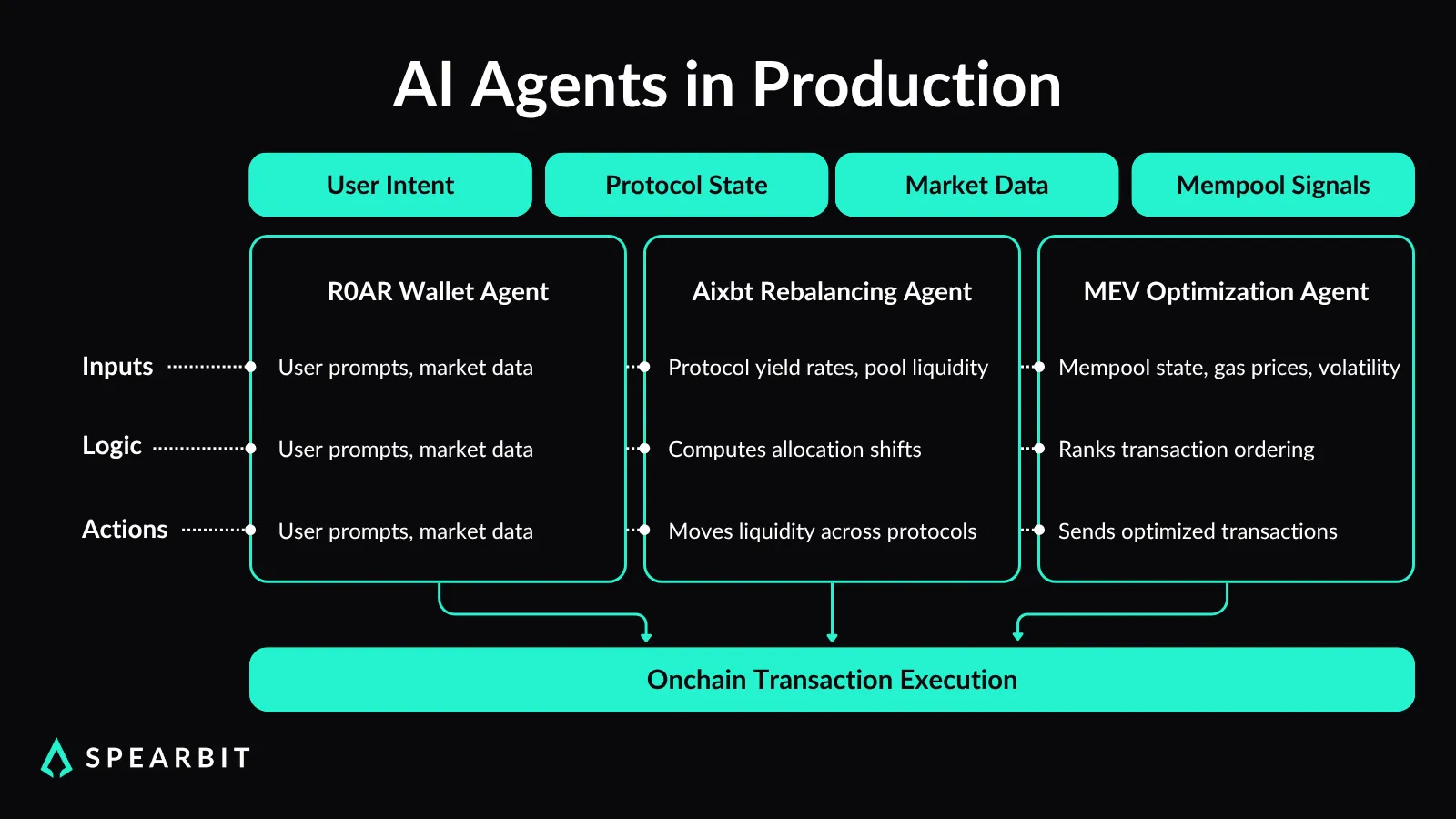

Autonomous agents are executing live trades, reallocating assets, and managing yield positions across DeFi protocols with minimal oversight. These systems employ model-based logic to evaluate liquidity conditions, assess protocol-specific risks, and determine execution paths based on real-time data.

Unlike rule-based automation, these agents operate with adaptive decision-making pipelines, often incorporating feedback from oracle feeds, mempool state, and historical volatility to inform their actions.

Recent deployments demonstrate the extent to which these agents have become integrated.

- R0AR has introduced a wallet interface backed by agents capable of parsing user inputs and initiating sequenced on-chain actions.

- Aixbt’s rebalancing system adjusts exposure across pools using signals derived from protocol behavior and market conditions.

- Some MEV bots incorporate model-based inference or algorithmic optimization to improve transaction ordering and gas estimation.

Each of these systems relies on inference processes that can shift outcomes based on timing, context, and prior state, making them fundamentally non-deterministic.

These deployments still lack consistent observability or execution controls. It’s common to see agents running without traceable input-output logs, policy-bound action limits, or structured failover mechanisms when facing adversarial or malformed inputs.

These edge conditions make it harder to anticipate how agents will respond without structured constraints or audit visibility. Without formal boundaries or validation layers in place, the operational safety of these systems depends heavily on assumptions that may not hold in production.

The Expanding Attack Surface

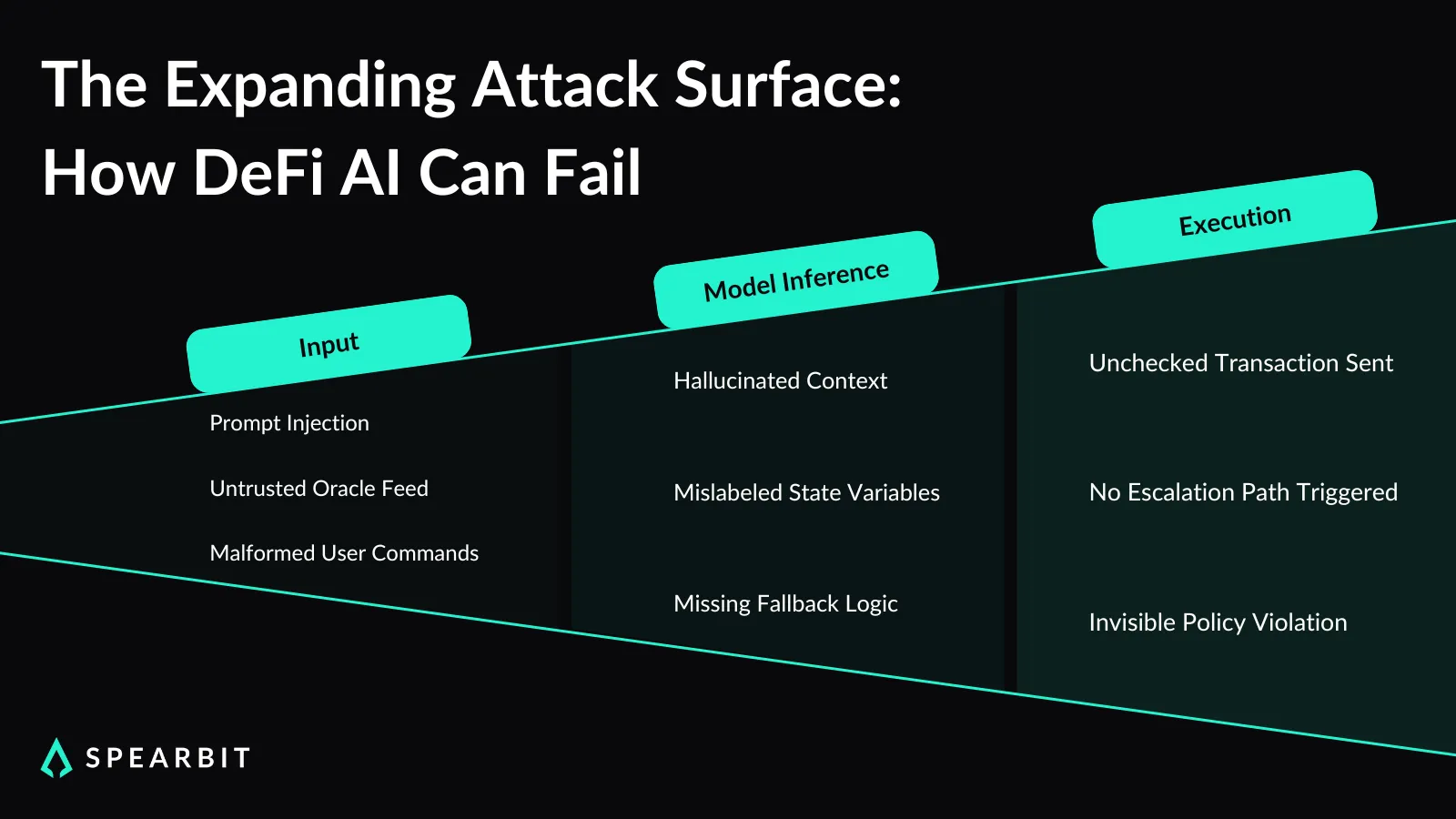

Model-Specific Vulnerabilities

Language model-driven agents introduce a range of new risks, many of which stem from untrusted or adversarial inputs. Prompt injection allows attackers to manipulate an agent’s interpretation of a task by inserting crafted text that alters the model’s output. These manipulations are often embedded in user-facing queries or external data sources, making them difficult to filter without strict input controls.

Agents can also generate outputs based on incorrect assumptions about system state. There are documented cases of models producing transaction parameters that reference non-existent balances or misread the behavior of target contracts.

When downstream systems accept these outputs with execution permissions, the agent may initiate transactions that don’t align with real conditions on-chain.

Control Gaps in Agent Integration

Some agent deployments lack fine-grained execution constraints. Once connected to a wallet or signer, agents typically operate with full transaction privileges and minimal guardrails.

There are a few mechanisms to scope access by function type, asset, or execution condition. This broad authority increases the impact of unexpected model behavior, especially under adversarial or ambiguous input conditions.

Fallback behavior is often undefined when a model returns malformed, incomplete, or unexpected output, the agent typically proceeds without escalation or halting the process. Many systems lack essential mechanisms, such as output schema checks, circuit breakers, or conditional execution constraints.

Without these, agents can act on unverified logic, and in some systems, the connection between agent input and resulting action may not be directly observable until after execution.

Security Incidents & Warnings

Challenges in Agent Behavior

As AI agents become more autonomous in DeFi systems, several classes of failure may emerge under production conditions. These are not always due to malicious interference but can stem from how agents interpret inputs or system state, especially when constraints, validation, or oversight are missing.

One plausible scenario could involve an agent generating a transaction that references a destination address it incorrectly inferred from input data. Without strict verification of routing logic, such a transaction could proceed even if the address has not been validated.

Another potential issue arises when an agent misinterprets protocol-defined allocation limits. For example, it could initiate a reallocation that exceeds the intended bounds due to inconsistent input formatting or outdated model assumptions.

If execution systems lack real-time checks or bounds enforcement, the transaction might still be processed. These failures do not require external compromise; they can result from legitimate agents acting on ambiguous, adversarial, or edge-case inputs.

Once deployed, agents may operate with broad transaction authority and minimal oversight. Without runtime safeguards like role checks, execution policies, or output validation layers, unintended behavior may go unnoticed until after execution.

What Security and Infrastructure Organizations Are Reporting

A recent survey of over 350 engineers and security teams reported consistent problems. 39% of respondents reported that agents performed actions outside their assigned scope. 33% saw data exposure from agents that accessed or output information without proper controls. Only 44% had enforcement systems in place that could limit what agents were allowed to do during runtime.

A lot of teams still rely on static tests and assumptions about how models will behave. These checks often overlook what happens in production, where inputs vary and decisions are made against live systems. Without enforcement tied to execution, agents can trigger transactions that violate policy, leak sensitive information, or divert funds, and there is no second chance once the block is signed.

Emerging Defenses

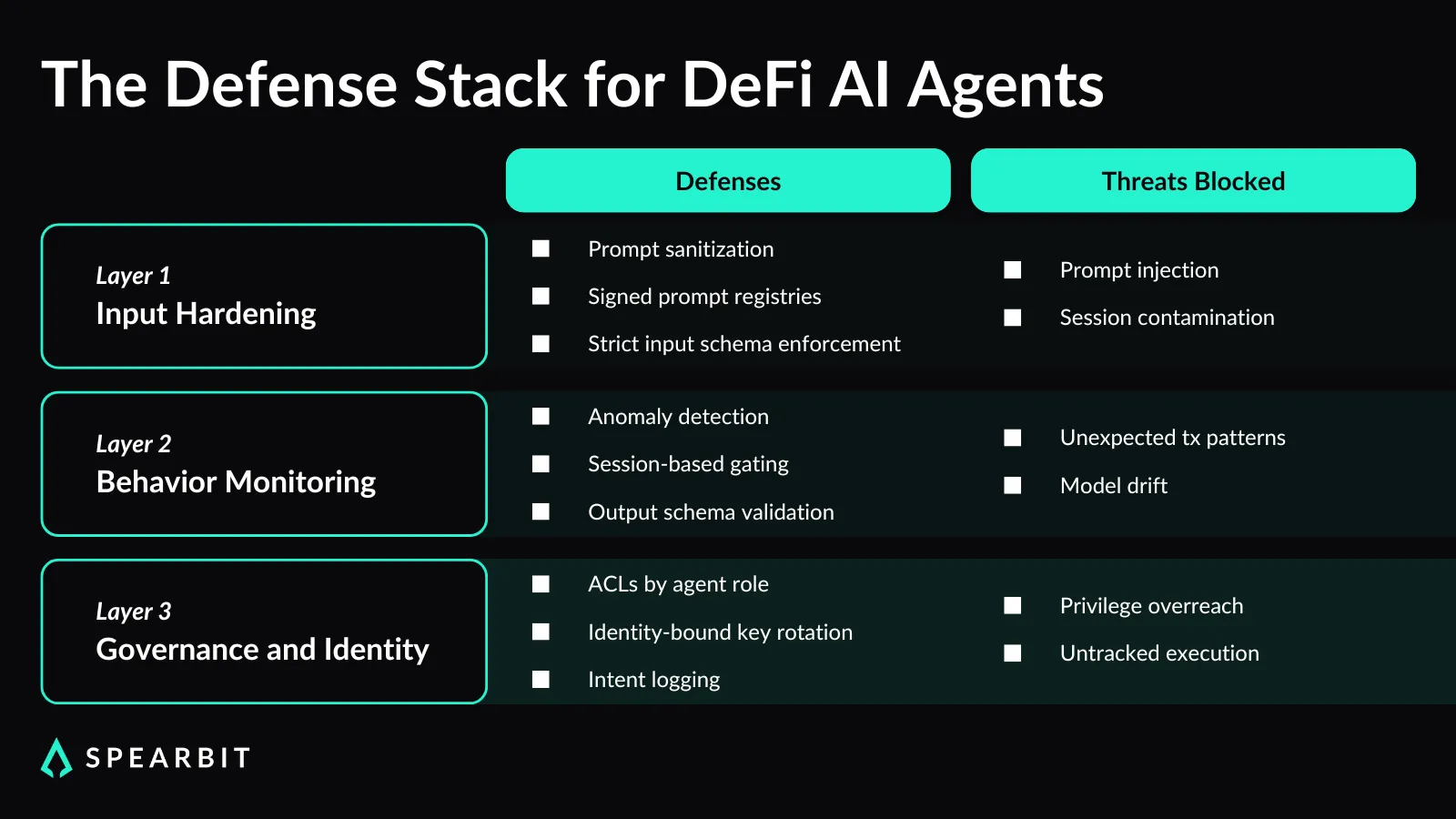

Monitoring and Detection

Security efforts around AI agents are shifting toward more active runtime visibility. Teams are starting to implement systems that verify agent identity at execution time and tie actions to specific context.

Time-based session controls are used to limit the duration during which an agent can act before reauthorization is required. These constraints help reduce unintended actions, primarily when agents operate across multiple tasks.

Anomaly detection is used to identify patterns that deviate from the typical behavior of a given agent. This includes tracking the size, timing, and structure of transactions.

Some teams are training models to detect when agent outputs deviate from baseline behavior in production. These systems are still in their early stages, but they offer a way to detect model drift or unusual execution before it has an impact.

While detection won't prevent every failure, it adds a layer of observability that is often missing. Without it, agent behavior is challenging to trace unless something goes wrong.

Input Hardening and Governance

Input validation is improving, teams are filtering prompts, enforcing strict input formats, and rejecting unexpected instructions before they reach the model. Some have begun signing prompt payloads to verify both structure and origin before execution. These checks help reduce the chance of prompt injection or cross-session contamination.

Governance controls are also starting to take shape, and teams are assigning specific permissions to agents based on their individual needs, rather than granting broad access.

Identity is being tied to agent function, and signing keys are being rotated to reduce long-term exposure. Roles are being split between the teams that design the agents, those that operate them, and those that authorize their deployment.

These defenses are becoming more common in environments where capital or trust is directly at risk. Adoption is still uneven, but the direction is clear: more visibility, more constraints, and less reliance on static validation.

What Spearbit Is Building Towards

Testing Agent Behavior Before Deployment

Spearbit is developing tools and workflows to evaluate how agents behave before they are deployed. The goal is to identify edge cases in which an agent responds when inputs are incomplete, unexpected, or intentionally manipulated. This includes red teaming prompts, checking execution boundaries, and creating test conditions that reflect production risk rather than ideal training scenarios.

We are also working on methods to tie agent behavior to identity and scope. That means being able to prove what an agent was supposed to do and checking whether the output stayed within those bounds. It’s not enough to confirm that a transaction was valid; we also want to verify that it aligns with the agent’s defined role and purpose.

Collaborating With Teams in Production

We’re working directly with teams to evaluate how their agents behave under load, in edge cases, and when coordination breaks down. This includes prompt structure, fallback logic, and how systems respond when the model output is malformed or incomplete. These are the areas where most issues show up, not during ideal conditions, but when the system is under pressure.

We’re also building adversarial test cases to help teams understand how agents react to unstable inputs, delayed responses, or unexpected data. These simulations help expose brittle logic and unclear execution paths. The goal is to help teams identify where the model is trusted too much and where constraints need to be clearer before deployment.

Conclusion

Systems that execute on-chain transactions without human review are already live. They respond to changing inputs through logic that isn’t easily verified with traditional tools. Certain behaviors only emerge under live conditions, where assumptions about input or scope may not hold as expected. Static checks aren’t enough; these systems need to be tested against unstable conditions and unexpected behaviors. Spearbit works with teams to define boundaries and simulate real-world stress. If you're deploying autonomous execution systems, Spearbit’s elite researchers can help you evaluate behavior under pressure before production makes it urgent.

Sources

- SailPoint Research Highlights Rapid AI Agent Adoption Driving Urgent Need for Evolved Security, Business Wire, May 28, 2025. businesswire.com

- R0AR Wallet Overview, R0AR. r0ar.io

- How AI Agent “AIXBT” Is Transforming Crypto Twitter And Trend Analysis, Forbes, January 6, 2025. forbes.com

FAQs

What is DeFi AI and how does it work?

DeFi AI refers to model-based systems that execute financial operations on-chain without direct user input. These agents interpret market data, protocol state, and user intent to submit transactions such as yield optimization, swaps, or position adjustments.

What are the risks of using AI agents in DeFi?

AI agents introduce risks not covered by traditional smart contract audits. These include prompt injection, hallucinated outputs, execution without proper constraints, and transactions based on incorrect or manipulated data.

How do AI agents fail in production environments?

Failures often occur due to unscoped permissions, malformed input, or ambiguous behavior under stress. These failures are not always visible during testing and can result in unauthorized asset transfers or policy violations.

What defenses exist for securing AI agents in DeFi?

Emerging defenses include runtime monitoring, anomaly detection, prompt sanitization, signed prompt registries, scoped execution permissions, and agent identity management with role separation.

How is Spearbit helping secure DeFi AI systems?

Spearbit collaborates with organizations to red team agents, simulate adversarial scenarios, validate execution boundaries, and stress test behavior before launch, closing the gap between model logic and secure production deployment.