Security reviews represent a critical inflection point in the lifecycle of a smart contract. They validate assumptions, uncover vulnerabilities, and establish trust with users and partners. However, effective reviews do not begin with the code alone. They require a deliberate, well-structured preparation process that sets clear expectations and enables deep, focused review.

We have worked with organizations across reviews, competitions, bug bounties, and more. The difference between high-signal engagements and inefficient ones is almost always rooted in preparation. This guide outlines the core elements engineering teams should establish before initiating a review, and offers clear, actionable recommendations based on our field experience.

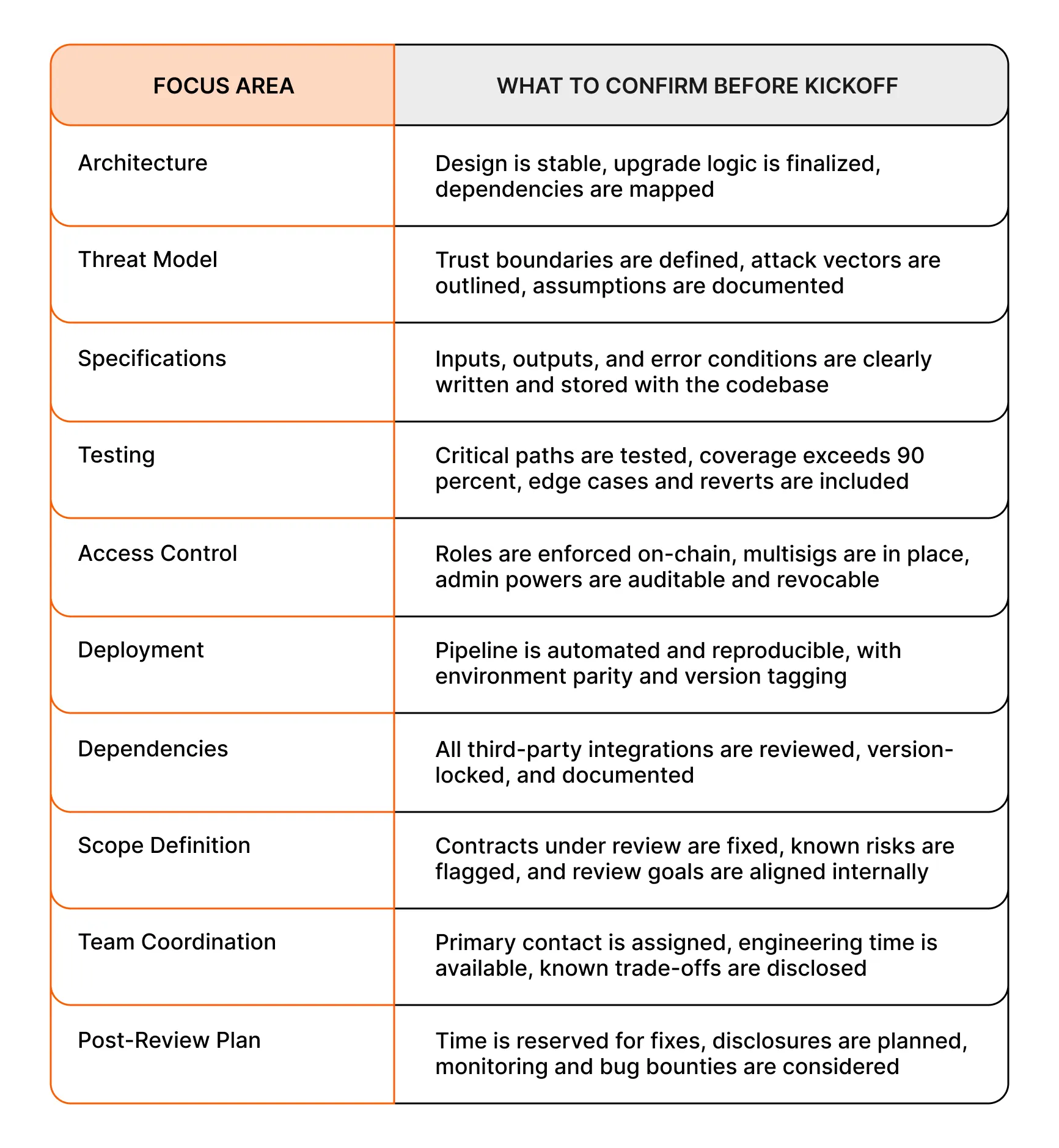

If you need a quick reference, use the table below to align your organization before kickoff, and then download the review readiness checklist.

Quick Reference: Preparing for Your Smart Contract Review

Finalize the Architecture

Initiating a review while core protocol components are still evolving undermines the process. Frequent changes create misalignment, obscure intent, and waste reviewer effort. A stable, well-defined architecture enables a higher-quality review and better resource allocation.

Your team should ensure that the foundational logic is finalized and internally reviewed, that upgrade mechanisms and proxy patterns are documented, and that external dependencies such as oracles, bridges, or APIs are mapped with clear justifications. Key invariants and trust boundaries must be explicitly defined, and all architectural diagrams should reflect the system as implemented. This clarity minimizes ambiguity and accelerates context-building for external reviewers.

Establish a Threat Model

Researchers do not simply identify bugs; they evaluate whether a system’s design aligns with its stated assumptions and expected behaviors. A documented threat model gives structure to that analysis by highlighting what could go wrong and under what conditions.

Begin by identifying privileged roles, protocol entry points, and assets at risk. Catalog known attack vectors relevant to your architecture, such as front-running, sandwiching, or re-entrancy. Make assumptions about user behavior and third-party service reliability explicit, and map built-in mitigations to each vector. Even a concise markdown threat model dramatically improves review precision and shared understanding.

Write Clear Specifications

Specifications bridge the gap between code and intention. They serve both security researchers and internal teams by eliminating guesswork and clarifying functional scope.

Each module or contract should be described in terms of expected inputs, outputs, and behaviors. Define error-handling logic, permission constraints, and invariants. Outline how external systems influence on-chain behavior, whether through governance mechanisms, oracle updates, or keeper bots. Store specifications alongside the codebase to ensure alignment across documentation, design, and implementation. Without this layer of clarity, security researchers are forced to reverse-engineer your goals, often at the cost of accuracy and efficiency.

Build a Reliable Test Suite

Reviews are not a substitute for robust internal testing. A mature test suite helps reviewers confirm behavior, validate assumptions, and focus on higher-order issues.

Your tests should cover all critical paths through both unit and integration tests. Provide evidence of strong coverage metrics, ideally exceeding ninety percent for scoped contracts. Include fuzzing and invariant testing where appropriate, particularly for systems with complex economic logic or multi-step flows. Ensure reverts, boundary conditions, and failure modes are observable through automated testing. Clean continuous integration outputs and reproducible environments allow researchers to trust results and reduce setup friction.
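As a rough illustration of the invariant testing mentioned above, the Python sketch below fuzzes a toy in-memory vault model and checks two invariants after every call. `ToyVault`, `check_invariants`, and `fuzz_vault` are hypothetical names invented for this example. A real suite would run equivalent checks against the actual contracts in whatever test framework your team uses.

```python
import random


class ToyVault:
    """Hypothetical in-memory model of a deposit/withdraw vault (not a real contract)."""

    def __init__(self) -> None:
        self.balances = {}  # user name -> balance
        self.total = 0

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")  # models a revert
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total += amount

    def withdraw(self, user: str, amount: int) -> None:
        if amount <= 0 or amount > self.balances.get(user, 0):
            raise ValueError("invalid withdrawal")  # models a revert
        self.balances[user] -= amount
        self.total -= amount


def check_invariants(vault: ToyVault) -> None:
    # Invariant 1: the recorded total always equals the sum of user balances.
    assert vault.total == sum(vault.balances.values())
    # Invariant 2: no balance is ever negative.
    assert all(balance >= 0 for balance in vault.balances.values())


def fuzz_vault(seed: int, steps: int = 1000) -> ToyVault:
    """Apply random deposits and withdrawals, checking invariants after each call."""
    rng = random.Random(seed)  # a fixed seed keeps any failure reproducible
    vault = ToyVault()
    users = ["alice", "bob", "carol"]
    for _ in range(steps):
        user = rng.choice(users)
        amount = rng.randint(-5, 100)  # deliberately includes invalid amounts
        action = rng.choice([vault.deposit, vault.withdraw])
        try:
            action(user, amount)
        except ValueError:
            pass  # a modeled revert must leave state unchanged
        check_invariants(vault)
    return vault


if __name__ == "__main__":
    for seed in range(10):
        fuzz_vault(seed)
    print("invariants held for all seeds")
```

Seeding the random generator is the design choice that matters most here: when an invariant fails, the same seed reproduces the failing sequence for both your team and the reviewers.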

Secure Access Controls and Admin Logic

Access control failures are among the most impactful vulnerabilities found during reviews. Role definitions, privilege boundaries, and escalation procedures must be rigorously defined and enforced.

Confirm that all role assignments are expressed in code and supported by documentation. Ensure governance parameters and upgrade paths are protected by multisigs, timelocks, or staged approvals. Define procedures for key rotation and revocation. Reviewers assess not only who has access to what, but also how easily those permissions can be escalated or abused. Access control logic that is unclear or inconsistent is treated as a primary risk factor.
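To make the idea of timelocked, role-gated admin actions concrete, here is a minimal Python model. It is a sketch only: `ToyTimelock` and its methods are hypothetical and do not correspond to any specific on-chain implementation. It shows the two properties a reviewer would typically look for: only holders of the admin role can queue or execute, and nothing executes before the delay has elapsed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyTimelock:
    """Hypothetical model of a role-gated, time-delayed admin queue."""

    admins: set[str]
    delay_seconds: int
    # Maps an action id to the earliest timestamp at which it may execute.
    queued: dict[str, int] = field(default_factory=dict)

    def queue(self, caller: str, action_id: str, now: int) -> int:
        """Schedule an action and return its earliest execution time."""
        self._require_admin(caller)
        eta = now + self.delay_seconds
        self.queued[action_id] = eta
        return eta

    def execute(self, caller: str, action_id: str, now: int) -> None:
        """Execute a queued action, but only after its delay has elapsed."""
        self._require_admin(caller)
        if action_id not in self.queued:
            raise PermissionError("action was never queued")
        if now < self.queued[action_id]:
            raise PermissionError("timelock delay has not elapsed")
        del self.queued[action_id]  # each queued action executes at most once

    def _require_admin(self, caller: str) -> None:
        if caller not in self.admins:
            raise PermissionError(f"{caller} lacks the admin role")
```

For example, with `ToyTimelock(admins={"multisig"}, delay_seconds=100)`, an action queued at `now=1000` is rejected at `now=1050` and accepted at `now=1100`. Any caller outside `admins` is rejected at every step.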

Prepare for Deterministic Deployment

Security researchers routinely simulate and reproduce on-chain behavior to validate contract state transitions. To support this process, deployment pipelines must be deterministic, transparent, and well-documented.

Ensure deployment scripts are reproducible and clearly annotated. Separate environments for staging, testnet, and production should be documented along with corresponding contract addresses and initialization parameters. If proxies or upgradeable patterns are used, define the flow of control and the location of logic contracts. When deployments cannot be replicated or traced, review scope and accuracy are compromised.

Disclose Dependencies Before the Review Begins

A substantial share of critical vulnerabilities arises not from your own contracts but from the third-party dependencies they rely on. These relationships must be disclosed and accounted for before the review begins.

Maintain a comprehensive list of libraries, token contracts, bridges, and oracle systems integrated into your codebase. Lock versions to avoid regressions or unknown changes. Clearly indicate which contracts are externally owned or inherited from open-source repositories. If any dependency has not been independently reviewed, flag that risk explicitly. The review process must be scoped around known and observable components, not hidden dependencies.
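One way to enforce version locking is a small check that flags any dependency not pinned to an exact release or commit. The Python sketch below assumes a simplified manifest that maps dependency names to version strings. The manifest shape, the dependency names, and the `unpinned_dependencies` helper are all hypothetical, and the commit check assumes 40-character SHA-1 hashes, so adapt it to whatever package manager or submodule layout your project uses.

```python
import re

# Exact versions such as "4.9.3" or "v1.2.0", or a full 40-character commit hash.
_EXACT_VERSION = re.compile(r"^v?\d+\.\d+\.\d+$")
_COMMIT_HASH = re.compile(r"^[0-9a-f]{40}$")


def unpinned_dependencies(manifest: dict[str, str]) -> list[str]:
    """Return the names of dependencies not locked to an exact release or commit."""
    return sorted(
        name
        for name, version in manifest.items()
        if not (_EXACT_VERSION.match(version) or _COMMIT_HASH.match(version))
    )


# Hypothetical manifest; names and versions are illustrative only.
example = {
    "token-library": "4.9.3",
    "oracle-adapter": "^1.2.0",  # a range: may resolve differently between installs
    "bridge-sdk": "latest",      # a floating tag
    "math-utils": "0123456789abcdef0123456789abcdef01234567",
}

print(unpinned_dependencies(example))  # prints ['bridge-sdk', 'oracle-adapter']
```

Running a check like this in continuous integration keeps the dependency list handed to reviewers consistent with what actually gets built.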

Define Scope and Engagement Parameters

Unscoped or loosely scoped reviews often yield findings that are irrelevant, duplicative, or superficial. Clearly defining what is in and out of scope maximizes reviewer focus and ensures your team receives feedback that aligns with your priorities.

Provide a commit hash that anchors the review to a known code state. List all modules, contracts, and scripts under review. Flag known areas of concern, such as incentive mechanisms, oracle updates, or staking logic. Let researchers know which issues matter most to you, whether re-entrancy, gas optimization, privilege escalation, or invariant violations. When the scope is clear, reviewers spend their time on the code and risks that matter most to you.
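A scope definition can be captured as a small, machine-checkable manifest. The Python sketch below is one possible shape, not a required format: `ReviewScope`, its fields, and the file paths in the usage note are hypothetical, and the commit check assumes 40-character SHA-1 hashes.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewScope:
    """Hypothetical scope manifest handed to reviewers at kickoff."""

    commit: str                          # full commit hash the review is anchored to
    in_scope: tuple[str, ...]            # files or modules under review
    out_of_scope: tuple[str, ...] = ()   # explicitly excluded files or modules
    priorities: tuple[str, ...] = ()     # issue classes the team cares about most

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the manifest is consistent."""
        found = []
        if not re.fullmatch(r"[0-9a-f]{40}", self.commit):
            found.append("commit must be a full 40-character hash, not a branch or tag")
        if not self.in_scope:
            found.append("no files listed in scope")
        overlap = sorted(set(self.in_scope) & set(self.out_of_scope))
        if overlap:
            found.append("listed as both in and out of scope: " + ", ".join(overlap))
        return found
```

For instance, `ReviewScope(commit="main", in_scope=("src/Vault.sol",), out_of_scope=("src/Vault.sol",)).problems()` reports two issues: the commit is a branch name rather than a hash, and one file appears on both lists.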

Align Your Team for the Review Window

Security reviews are collaborative engagements. Reviewer efficiency depends on timely communication and shared context. Internal alignment ensures that your team can support the process with minimal overhead.

Before kickoff, designate a single technical point of contact who is deeply familiar with the codebase. Ensure engineering bandwidth is available to address questions, deploy fixes, or clarify edge cases. Schedule working windows that account for time zone differences if your researchers are distributed. If there are known risks or design trade-offs, raise them early to prevent misunderstanding later.

Explore Next Steps

A well-prepared security review improves issue coverage, reduces coordination delays, and increases the impact of reviewer insights.

To baseline your architecture, development readiness, and internal alignment, download our field-tested checklist below.

Then use the Scope Builder to define a tailored engagement structure for your system.

Download the Smart Contract Review Checklist

An actionable list to prepare for a smart contract review and align your team before kickoff.

Plan for Post-Review Work

Establish a timeline for triaging and addressing issues based on severity. Coordinate disclosure strategy with your researchers if findings will be published. Configure monitoring and alerting for post-deployment behavior, including unexpected access patterns, paused contracts, or transaction anomalies. Consider launching a bug bounty or code competition to maintain a forward-looking security posture. For systems with upgradeable components, schedule follow-up reviews to maintain coverage across protocol iterations.

Cantina supports organizations before, during, and after reviews. Contact us to get started.