Beyond Black Box Fuzzing: Spearbit’s Expert-Led Security Approach

Fuzzing is a powerful, chaos-driven testing technique that plays a vital role in modern software security. By feeding applications malformed or unexpected inputs, it uncovers hidden vulnerabilities - from simple bugs to critical flaws. While many tools rely on generic automation, Spearbit’s approach combines fuzzing automation with expert-driven manual review, tailored to the specific architecture and threat model of each target system.

Each campaign begins with a deep technical assessment, where researchers identify high-risk areas and design custom fuzzing harnesses and targeted test scenarios. This ensures comprehensive exploration, including rare edge cases that off-the-shelf tools often miss.

This guide breaks down how fuzzing works, explores real-world examples, outlines core strategies, and offers actionable advice to integrate fuzzing into your development workflow.

How Fuzzing Works

At its core, fuzzing injects unpredictable data into software to trigger unexpected behavior. Inputs may include corrupted payloads, malformed files, or boundary-case parameters. Crashes, memory leaks, and unhandled exceptions often signal deeper security flaws. Over time, fuzzing has uncovered some of the most impactful software vulnerabilities, cementing its role in the security toolkit.
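To make the idea concrete, here is a minimal sketch of a mutation-based fuzzer. It is not Spearbit's tooling: `parse_record`, its length-prefix format, and the planted bug are hypothetical stand-ins chosen only to show the loop of mutating an input, running the target, and recording any unexpected exception.

```python
import random

def parse_record(data: bytes) -> bytes:
    """Hypothetical target: a 1-byte length prefix followed by a payload."""
    if not data:
        raise ValueError("empty input")  # expected, graceful rejection
    length = data[0]
    payload = data[1:]
    if length > len(payload):
        # Stand-in for a bug: the parser trusted the length prefix.
        raise IndexError("length prefix exceeds payload")
    return payload[:length]

def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply one random mutation: bit flip, byte insertion, or truncation."""
    data = bytearray(seed)
    choice = rng.randrange(3)
    if choice == 0 and data:
        pos = rng.randrange(len(data))
        data[pos] ^= 1 << rng.randrange(8)
    elif choice == 1:
        data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
    else:
        del data[rng.randrange(len(data) + 1):]
    return bytes(data)

def fuzz(seed: bytes, iterations: int, rng_seed: int = 0) -> list[bytes]:
    """Run the target on mutated inputs and collect those that crash it."""
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            parse_record(candidate)
        except ValueError:
            pass  # handled rejection, not a finding
        except Exception:
            crashes.append(candidate)  # unexpected exception: keep the input
    return crashes

crashes = fuzz(b"\x03abc", iterations=1000)
print(f"{len(crashes)} crashing inputs found")
```

Each recorded input is a reproducible test case: feeding it back into `parse_record` triggers the same failure, which is the starting point for root-cause analysis.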

However, not all fuzzing is created equal. Many tools rely on prebuilt test cases and broad scanning, often missing architecture-specific flaws. Spearbit closes this gap by combining automation with manual expertise. After an in-depth architectural review, our team builds customized fuzzing infrastructure: tailored harnesses and inputs aligned with each system’s unique design and risk profile.

This hands-on approach continues post-discovery. Once fuzzing campaigns identify vulnerabilities, Spearbit conducts root-cause analysis, offers remediation guidance, and re-tests to validate fixes, creating a feedback loop that promotes long-term resilience, not just quick patching.

“One of the biggest advantages of working with a strong team is how much time we save on manual review, because we can rely on skilled engineers during the fuzzing process. For Optimism, for example, I didn’t officially need to do a manual review. It was more effective to focus on setting up the fuzzing tools. The two approaches complemented each other perfectly: manual review caught issues I would have missed, and the fuzzer found bugs that manual review alone wouldn’t have uncovered,” said 0xicingdeath, security expert at Spearbit.

Spearbit’s Method

In the differential fuzzing engagement described in the example later in this guide, Spearbit tailored its setup specifically to the target environment. The team implemented a custom harness to simulate syscall inputs and monitor register state transitions. Instrumentation allowed the fuzzer to assert equivalence between implementations on every execution. Once an inconsistency was detected, Spearbit researchers performed root-cause analysis, constructed a reproducible test case, and delivered a fix recommendation with risk categorization.

This engagement demonstrates the necessity of advanced fuzzing strategies for identifying emergent behavior. It also reflects Spearbit’s core strength: combining dynamic analysis with expert triage to validate the integrity of multi-environment systems with precision.

Types of Fuzzing

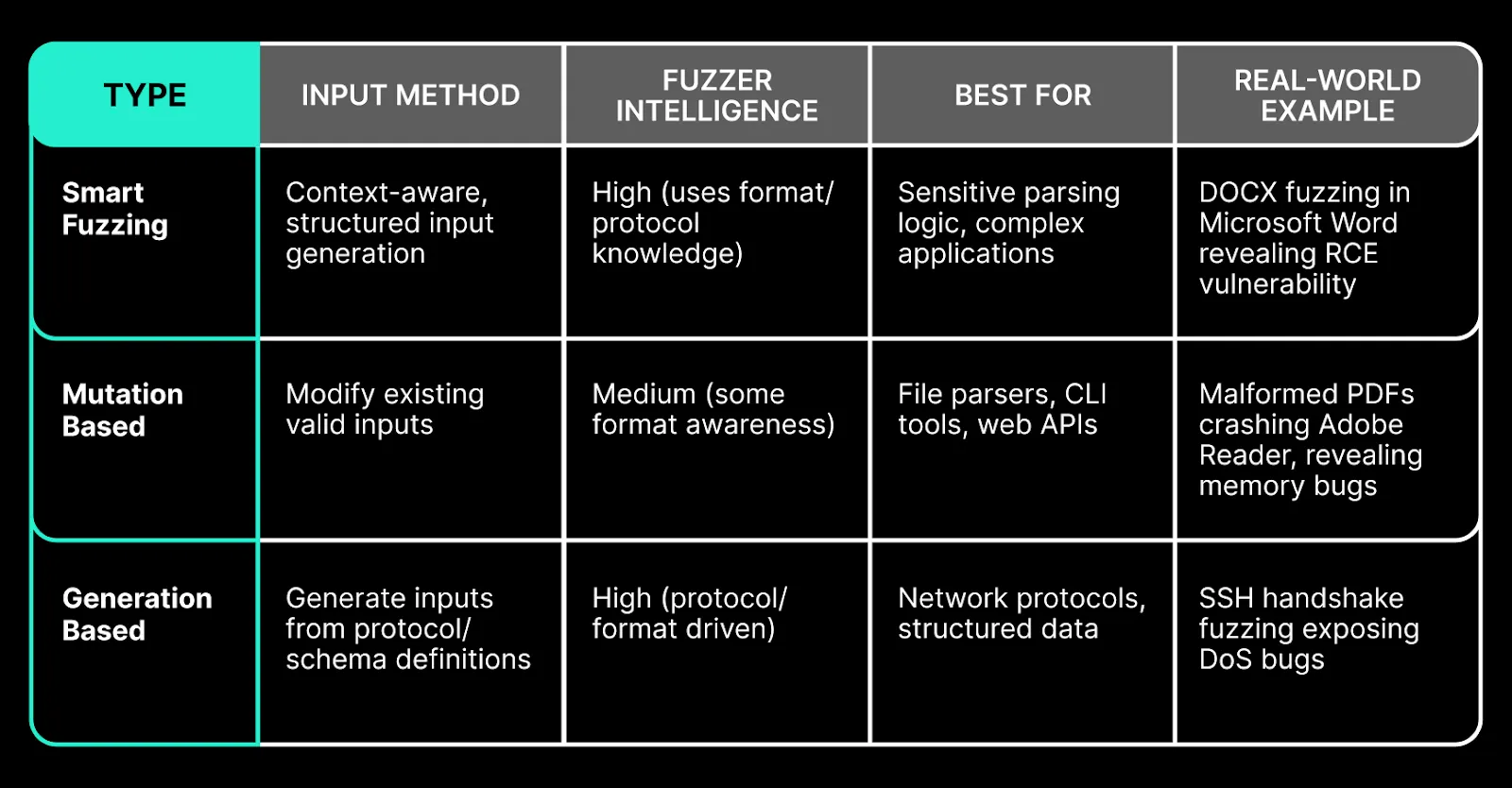

Fuzzing isn't a one-size-fits-all technique. There are several approaches, each suited to different testing goals and target systems. Understanding the differences between smart and dumb fuzzers, as well as key strategies like mutation-based and generation-based fuzzing, is crucial for choosing the right method. The accompanying table summarizes these fuzzing types, highlighting how they work, where they’re most effective, and real-world examples of their impact.

Example with Differential Fuzzing: Revealing Cross-Implementation Semantic Inconsistencies

In a recent engagement, Spearbit applied a differential fuzzing strategy to validate the consistency of two implementations of the same execution logic. One version operated on-chain, the other off-chain. Both were intended to be functionally identical. The goal was to identify subtle inconsistencies that would not surface through static analysis or manual review alone.

Objective: The fuzzer was configured to generate randomized inputs representing low-level syscall sequences. These inputs were simultaneously passed to both implementations. The output of each execution was monitored at the register level to verify that both environments either produced identical results or failed in the same manner.
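The comparison loop described above can be sketched as follows. This is a simplified illustration, not the harness used in the engagement: the two `*_step` functions, the single 32-bit register, and the planted wraparound bug are all hypothetical. The point is the structure: the same random program goes to both implementations, and each outcome (a result or a failure type) must match.

```python
import random

MASK32 = 0xFFFFFFFF

def reference_step(reg: int, operand: int) -> int:
    """Hypothetical reference implementation: 32-bit wrapping add."""
    return (reg + operand) & MASK32

def candidate_step(reg: int, operand: int) -> int:
    """Hypothetical second implementation with a planted bug: no wraparound."""
    return reg + operand

def run(step, program):
    """Execute a program (a list of operands) and return the final register."""
    reg = 0
    for operand in program:
        reg = step(reg, operand)
    return reg

def outcome(step, program):
    """Normalize a run to ('ok', result) or ('err', exception type name)."""
    try:
        return ("ok", run(step, program))
    except Exception as exc:
        return ("err", type(exc).__name__)

def differential_fuzz(iterations: int, rng_seed: int = 0):
    """Return the first program on which the two implementations disagree."""
    rng = random.Random(rng_seed)
    for _ in range(iterations):
        length = rng.randrange(1, 9)
        program = [rng.getrandbits(32) for _ in range(length)]
        ref = outcome(reference_step, program)
        cand = outcome(candidate_step, program)
        if ref != cand:
            return program, ref, cand
    return None

divergence = differential_fuzz(10_000)
print(divergence)
```

Comparing normalized outcomes rather than raw return values lets the harness treat "both fail in the same manner" as agreement, matching the objective stated above.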

Discovery: After an extended test cycle, the fuzzer identified a semantic divergence caused by the use of the bitwise NOT operator on a padded uint256 value. Although both implementations performed what appeared to be equivalent logical checks, their behavior diverged due to environment-specific interpretation of bitwise operations.

Off-Chain Implementation

On-Chain Implementation (EVM)

In the off-chain environment, the expression evaluated predictably as a logical negation, yielding a boolean outcome of either 0 or 1. In contrast, the EVM’s NOT opcode performed a full-width bitwise inversion of the 256-bit input, returning, for example, type(uint256).max for an input of zero. This caused the conditional logic to differ, resulting in divergent register states and inconsistent downstream behavior.
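The original code from the engagement is not reproduced here, so the following is only an illustrative model of the two semantics, written in Python. `logical_not` and `evm_not` are hypothetical helper names; the second mirrors how a bitwise NOT behaves on a 256-bit word.

```python
UINT256_MAX = (1 << 256) - 1

def logical_not(value: int) -> int:
    """Boolean negation: the result is always 0 or 1."""
    return int(not value)

def evm_not(value: int) -> int:
    """Bitwise NOT over a 256-bit word: every bit of the input is inverted."""
    return ~value & UINT256_MAX

# For an input of 0, both results are non-zero, but the values differ.
assert logical_not(0) == 1
assert evm_not(0) == UINT256_MAX

# For an input of 1, the results disagree even as conditions:
# logical NOT gives 0 (falsy), bitwise NOT gives 2**256 - 2 (truthy).
assert logical_not(1) == 0
assert evm_not(1) == UINT256_MAX - 1

for value in (0, 1):
    same_branch = bool(logical_not(value)) == bool(evm_not(value))
    print(f"input={value}: same branch taken? {same_branch}")
```

Any non-zero input other than the all-ones word produces a non-zero bitwise result, so a condition built on the bitwise form takes the opposite branch from the logical form for almost every non-zero value.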

Impact: This inconsistency would have been virtually undetectable through manual code review. It required stochastic input generation, real-time output comparison, and architectural understanding of both execution environments. The divergence posed a significant correctness risk, particularly in protocols dependent on deterministic cross-layer execution.

Why Developers and Organizations Need Fuzzing

As software systems grow in complexity and integration, so does their attack surface. Traditional testing—manual reviews, linters, static analysis—struggles to keep up with evolving threats. Fuzzing addresses this gap by automatically generating unexpected inputs to expose vulnerabilities that other methods often miss.

Fuzzers are exceptional for:

- Uncovering Hidden Bugs: They reveal edge cases, hidden assumptions, and logic flaws overlooked in code reviews and unit tests.

- Scaling Security Testing: Unlike manual methods, fuzzers continuously and efficiently probe massive codebases, surfacing critical issues quickly.

- Catching Dangerous Vulnerabilities: From denial-of-service threats to arithmetic overflows, fuzzers help prevent real-world exploits.

- Legacy and Regression Detection: They rediscover bugs in old code and verify that past issues remain fixed as software evolves.

- Differential Testing: In multi-implementation systems like EVMs, fuzzing uncovers inconsistencies across languages or platforms.

Fuzzers never sleep: they can run 24/7. But effective use requires thoughtful configuration: setting iteration goals, monitoring progress, and evolving inputs to ensure broad and meaningful coverage. Fuzzing doesn't replace manual review, but it supercharges it. When integrated into a broader security strategy, it helps teams find more bugs, faster, and with fewer resources.

A Security Leap Forward: Spearbit’s Advanced Fuzzing Approach

Spearbit stands apart by delivering a unique fuzzing approach designed to tackle the challenges of decentralized systems. Starting with an in-depth architectural assessment, Spearbit’s team crafts custom fuzzing harnesses and attack vectors that target the most critical and vulnerable components of your protocol or application.

Spearbit’s key benefits include:

- Protocol-aware fuzzing across multiple languages: Supports Solidity, Rust, Go, Cairo, and more for comprehensive blockchain stack coverage, from smart contracts to node clients and off-chain services.

- Adaptive, feedback-driven mutation: Real-time input refinement based on code coverage and execution feedback uncovers deep, stateful bugs like reentrancy and logic inconsistencies.

- Detailed findings with actionable insights: Every vulnerability comes with reproducible test cases, risk scoring, and visual coverage reports to guide efficient remediation.

- Continuous retesting and verification: Beyond discovery, Spearbit ensures fixes are effective with ongoing validation cycles, moving past the typical report-and-patch model.

- Expert, community-powered research: Leveraging a curated network of elite security researchers, Spearbit blends adversarial expertise with automated tools for maximum impact.
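As a rough illustration of the feedback-driven mutation mentioned in the list above, the sketch below keeps any input that reaches a previously unseen branch and mutates from that growing corpus. It is a toy model under stated assumptions: `target` is a hypothetical function that reports its own branch IDs, whereas real fuzzers obtain coverage through compiler or runtime instrumentation.

```python
import random

def target(data: bytes) -> set[str]:
    """Hypothetical instrumented target: returns the branch IDs it reached."""
    hit = {"entry"}
    if len(data) > 0 and data[0] == ord("F"):
        hit.add("b1")
        if len(data) > 1 and data[1] == ord("U"):
            hit.add("b2")
            if len(data) > 2 and data[2] == ord("Z"):
                raise RuntimeError("deep bug reached")
    return hit

def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte with a random value."""
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)

def coverage_guided_fuzz(seed: bytes, max_iterations: int, rng_seed: int = 0):
    """Return (crashing input, iterations used), or (None, max_iterations)."""
    rng = random.Random(rng_seed)
    corpus = [seed]
    seen: set[str] = set()
    for i in range(max_iterations):
        candidate = mutate(rng.choice(corpus), rng)
        try:
            hit = target(candidate)
        except RuntimeError:
            return candidate, i + 1
        if not hit <= seen:
            # New coverage: remember it and keep the input for later mutation.
            seen |= hit
            corpus.append(candidate)
    return None, max_iterations

crash, used = coverage_guided_fuzz(b"\x00\x00\x00", 200_000)
print(crash, used)
```

Without the feedback step, a single random byte mutation of the seed can never produce all three required bytes at once; retaining partially successful inputs is what lets the search progress one nested check at a time.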

Combined with broader security measures, like threat modeling, audits, and formal verification, Spearbit’s hands-on, architecture-aware fuzzing equips developers to identify and fix vulnerabilities early and continuously. In a future of evolving threats like zero-knowledge apps and AI-driven protocols, Spearbit provides the adaptive, intelligent fuzzing necessary to stay ahead of attackers and protect critical blockchain infrastructure.

Practical Use Cases for Developers

Spearbit offers developers a powerful platform to perform advanced fuzz testing on smart contracts, helping identify security flaws before they reach production. The example below shows one concrete application.

Example:

Fuzzing a DeFi lending protocol could reveal hidden issues in interest rate calculations or privilege escalation through borrower roles. With Spearbit, developers can identify and patch these flaws before users are impacted.
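A minimal sketch of what such a campaign looks like in practice is invariant fuzzing: apply random sequences of actions and check a property after every step. Everything here is hypothetical, including the `ToyLendingPool` model, its 50% collateral factor, and the planted bug in `withdraw`; a real protocol would be exercised through its actual contracts.

```python
import random

class ToyLendingPool:
    """Hypothetical, heavily simplified lending pool for illustration only."""
    COLLATERAL_FACTOR_PCT = 50

    def __init__(self):
        self.collateral = {}
        self.debt = {}

    def limit(self, user):
        return self.collateral.get(user, 0) * self.COLLATERAL_FACTOR_PCT // 100

    def deposit(self, user, amount):
        self.collateral[user] = self.collateral.get(user, 0) + amount

    def borrow(self, user, amount):
        if self.debt.get(user, 0) + amount > self.limit(user):
            raise ValueError("insufficient collateral")
        self.debt[user] = self.debt.get(user, 0) + amount

    def withdraw(self, user, amount):
        if amount > self.collateral.get(user, 0):
            raise ValueError("insufficient balance")
        # Planted bug: outstanding debt is not checked before release.
        self.collateral[user] -= amount

def solvent(pool):
    """Invariant: no user's debt exceeds their borrowing limit."""
    return all(debt <= pool.limit(user) for user, debt in pool.debt.items())

def fuzz_invariant(runs, steps, rng_seed=0):
    """Return the first action trace that breaks the invariant, if any."""
    rng = random.Random(rng_seed)
    for _ in range(runs):
        pool, trace = ToyLendingPool(), []
        for _ in range(steps):
            action = rng.choice(["deposit", "borrow", "withdraw"])
            user = rng.choice(["alice", "bob"])
            amount = rng.randrange(1, 100)
            try:
                getattr(pool, action)(user, amount)
            except ValueError:
                continue  # rejected action, state unchanged
            trace.append((action, user, amount))
            if not solvent(pool):
                return trace
    return None

trace = fuzz_invariant(runs=1000, steps=20)
print(trace)
```

The returned trace is a replayable sequence of calls, which makes the finding easy to turn into a regression test once the missing debt check is added.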

Common Pitfalls and How to Set Up for Success with Spearbit

Set Up for Success: To maximize the effectiveness of fuzz testing with Spearbit:

- Target Critical Functions: Focus fuzzing on areas with the most security impact, such as fund transfers and role-based access control.

- Continuous Execution: Run fuzzers for extended periods to enhance the mutation process and catch deeper bugs, though this often requires significant manual tweaking to optimize results.

- Layered Security Approach: Use Spearbit alongside static analyzers and formal verification tools for a comprehensive security audit.

Common Pitfalls: Avoid these mistakes to get the most value from fuzz testing:

- Ignoring False Positives: Review every flagged anomaly before dismissing it. Unexamined false positives can obscure real vulnerabilities.

- Overlooking Environment-Specific Bugs: Make sure to test under realistic network conditions and block timing scenarios.

- Equating Coverage with Security: High coverage, especially line or statement coverage, is desirable but doesn’t guarantee security. Use coverage to guide your testing efforts, and prefer branch coverage where possible, since it offers more insight into control flow and correctness.
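The coverage pitfall in the list above can be shown with a small, hypothetical function. A single test executes every line of `safe_ratio`, yet the path where the `if` is skipped is never exercised, and that is where the failure lives.

```python
def safe_ratio(numerator: int, denominator: int, clamp: bool) -> int:
    """Hypothetical helper: optionally clamps the denominator to at least 1."""
    if clamp:
        denominator = max(denominator, 1)
    return numerator // denominator

# This one call runs every line of the function (100% line coverage).
assert safe_ratio(10, 0, clamp=True) == 10

# The untested branch (clamp=False) still fails on the same input.
try:
    safe_ratio(10, 0, clamp=False)
    failed = False
except ZeroDivisionError:
    failed = True
print("uncovered branch fails:", failed)
```

A branch coverage report would flag the untaken `clamp=False` path even though the line coverage figure looks complete.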

Future of Fuzzing in Decentralized Environments

The evolution of fuzzing is intertwined with the progress in blockchain development and security research. One key direction involves the emergence of autonomous fuzzers, systems that dynamically adjust their strategies based on how a contract behaves, enabling them to identify and target vulnerabilities with minimal manual input.

Another frontier is the integration of artificial intelligence. Machine learning models are being trained to anticipate the most effective mutation strategies, dramatically improving the speed and accuracy with which high-impact bugs are uncovered.

As technologies like zero-knowledge applications (zkApps) and rollups gain traction, fuzzers are also evolving to handle these new paradigms. This includes testing the integrity of zero-knowledge proofs and the nuanced behaviors of Layer 2 interactions.

Looking further ahead, we can expect fuzzing tools to become deeply integrated with formal verification methods, such as symbolic execution engines and cryptographic proof systems, creating a more comprehensive and robust security framework.

Get a Tailored Quote in 24 Hours

Spearbit offers comprehensive, end-to-end security services tailored to the unique needs of decentralized systems. Ready to protect your protocol? Let's talk. We can deliver a detailed quote within 24 hours, customized to your stack and timeline.